Data backup in the Backup Manager is session-based. A session is a process during which a data selection on a client device is backed up to a remote server. Backup sessions result in the creation of virtual copies that can be retrieved at any time.

Because data tends to change, backup sessions usually run on a repeated basis (for instance, every day). You can start a new backup session manually or you can create a schedule for it.

1 data source = 1 backup session

There is a separate session for each data source. For example, if a backup selection has two data sources (Files and folders and System state), there will be two backup sessions.

Backup session structure

All backup sessions go through two stages: scanning and processing. The procedure varies slightly for initial sessions (performed on a device for the first time) and subsequent sessions (all other sessions after the initial one).

Stage 1: Scanning

At this stage, the Backup Manager makes the list of data to back up. In short, here is what it does:

- Performs a system scan to locate the data selected for backup

- Puts the files in a queue

Stage 2: Processing

The Backup Manager takes the queued files one by one in the order of priority (user-defined) and recentness (defined by change dates) and handles them in the following way:

- Cuts them into smaller fragments known as slices

- Calculates a hash (a unique data fingerprint) for each slice. During further backups, the hash will be used to detect changes

- Compresses the slices to reduce their size (saves bandwidth and storage space)

- Encrypts the slices using the private Encryption Key/Security Code or Passphrase set during Backup Manager installation (the data will be inaccessible without the key)

- Combines multiple slices into cabinets to utilize network and storage resources more efficiently

- Transfers the cabinets to the Cloud. By default, 4 simultaneous connections are established. Their number can be customized using the

SynchronizationThreadCountparameter in the advanced settings. The higher the number of connections, the faster your data will reach the storage. However, a high number of connections consumes more bandwidth and memory resources - Registers all files and slices in a local database called the Backup Register and uploads the database to the Cloud

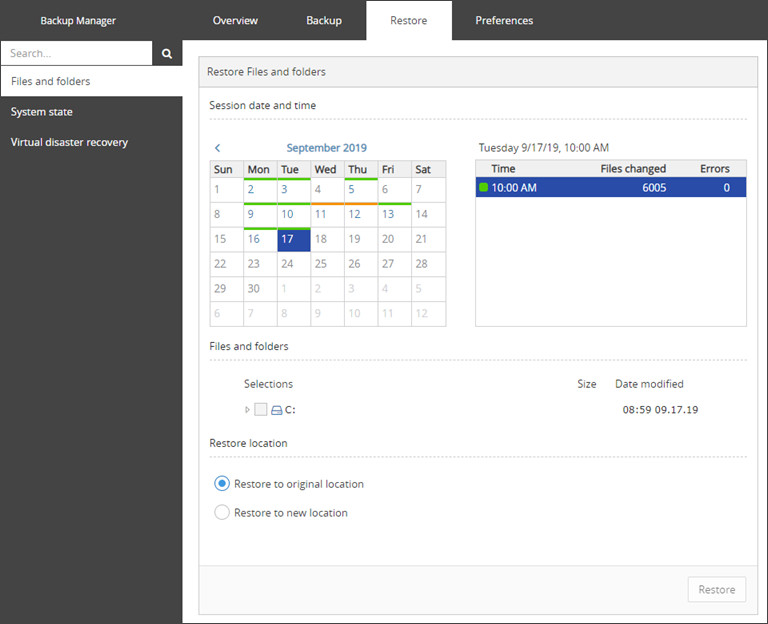

When the backup session is complete, the data becomes available for recovery. You can see a new entry in the calendar on the Restore tab.

Stage 1: Scanning (only for non-accelerated backups)

The Backup Manager scans the system to find the data to back up and compares the results with those from the previous session. The comparison is drawn based on file attributes (names, change dates, size, access permissions and so on) stored in the Backup Register. If at least one attribute in a file has changed, the file is added to the queue for further processing.

If a session is powered by the Backup Accelerator, the scanning stage is missing. The Backup Manager downloads the list of changes from the cloud and gets straight to processing.

Stage 2: Processing

The Backup Manager slices the files in the order of priority and recentness and calculates hashes for the slices. Then the hashes are compared to the hashes from the previous backup session. New slices whose hashes do not have matches in the storage undergo the standard procedure. They are compressed, encrypted, combined into cabinets and delivered to the storage. The Backup Register is updated with new records after that.

Sequence of backup sessions

Backup sessions run successively. You can start a new session after the current one is complete (the Run backup button is unavailable while there is an active backup process).

Backup sessions can sometimes overlap (for example, if they were scheduled one shortly after another or if one session had been started manually just before a scheduled session was due). If this happens, the session that started first is completed normally. Further sessions that started while it was in progress are processed depending on their type.

- If the sessions belong to the same data source, the later sessions are skipped. The next backup takes place according to the active schedule (skipped sessions are not re-run)

- If the sessions belong to different data sources, the later sessions are put into a queue. They will be processed later, when the current session is complete

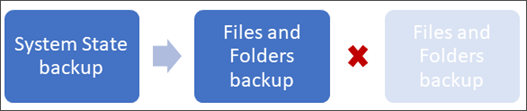

- If a queue contains a backup session for a certain data source, all newer sessions for that data source are skipped. Let's say, a System State backup is in progress. In the meanwhile, a Files and Folders backup is initiated. The Backup Manager adds Files and Folders to the queue. Then another Files and Folders backup is started on schedule which gets skipped. Users may get an impression that the Files and Folders backup got skipped without a reason, but that is because they cannot view the queue that already contains the data source

Practical implications

- Backup Manager users needn't make any preparations for backup: move the files to a separate folder, compress them or check for duplicates

- Data sent to storage is always unique in the sense that only changed blocks are transferred and only one copy of each change is submitted. This guarantees the use of bandwidth and storage space economically

- If canceling a backup, the data already sent prior to the cancellation will stay in the cloud until it passes the retention period of the device. This data will not need to be sent again

- When the Backup Manager searches for changes, it compares file properties first. Only if a property has changed, a content analysis is made. This drastically reduces the amount of time and processing required for change detection, especially when it comes to large data sets

- To speed up backup sessions, you can enable the Backup Accelerator (Windows only) or increase the number of simultaneous connections